IMAGE RECOGNITION

Social media has transformed the way we communicate and socialize. Facebook and Twitter are always looking to learn more about their users, and people eagerly share information with the public, which these companies use to improve their business and services. This information comes in the form of text, images, or video. In the age of the selfie, capturing every moment on a cell phone is the norm. Be it a private holiday, an earthquake shaking some part of the world, or a cyclone blowing the roof off a house, everything is photographed and posted. Social media companies and researchers use these images as data for image recognition, a core task in computer vision.

Image recognition is the process of detecting and identifying an object or a feature in a digital image or video in order to add value for customers and enterprises. Billions of pictures are uploaded to the internet every day. These images are identified and analysed to extract useful information. The technology has a wide range of applications. In this blog we will touch upon some of them and the techniques they use.

Text Recognition

We will begin with the technique used to recognise a handwritten number, a task that machine learning technologies like deep learning can handle. First, a brief note on AI, ML, DL, and ANN. Artificial intelligence (AI) is human-like intelligence exhibited by machines. Machine learning (ML) is an approach to achieving artificial intelligence, and deep learning (DL) is a technique for implementing machine learning. An artificial neural network (ANN) is modelled on the biological neural network. A single neuron passes a message to another neuron across this network if the sum of the weighted input signals it receives from one or more neurons exceeds a threshold. The condition in which the threshold is exceeded and the message is passed along to the next neuron is called activation [1].
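The activation rule described above can be sketched in a few lines of Python. This is a minimal illustration, not a full neural network: the function name `neuron_fires` and the signal, weight, and threshold values are made up for the example.

```python
def neuron_fires(inputs, weights, threshold):
    """Return True when the weighted sum of the inputs exceeds the threshold."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return weighted_sum > threshold

# Hypothetical signals arriving from three upstream neurons.
signals = [1.0, 0.0, 1.0]
weights = [0.6, 0.9, 0.5]  # illustrative connection strengths

# The weighted sum is 0.6 + 0.0 + 0.5 = 1.1.
print(neuron_fires(signals, weights, threshold=1.0))  # True: 1.1 exceeds 1.0
print(neuron_fires(signals, weights, threshold=1.5))  # False: 1.1 does not exceed 1.5
```

Training a network amounts to adjusting the weights so that the right neurons activate for the right inputs.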

There are different ways to recognize images. We will use a neural network to recognize a simple piece of handwritten text, the number 8. A critical requirement for machine learning is data: the more data there is, the better the machine can be trained. A neural network takes numbers as input. To a computer, an image is a grid of numbers, each representing how dark a pixel is. The handwritten number 8 is represented as below.

This 18x18 pixel image is treated as an array of 324 numbers. These are the 324 input nodes to the neural network as shown below.
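To make the arithmetic concrete, here is a small sketch of how an 18x18 grid becomes a flat list of 324 input values. The image here is a placeholder with a single dark pixel rather than a real handwritten digit, and the helper name `flatten` is chosen only for this example.

```python
SIZE = 18  # width and height of the image in pixels

def flatten(image):
    """Turn a grid (a list of rows) into one flat list, row by row."""
    return [pixel for row in image for pixel in row]

# Placeholder image: all white (0.0) except one dark pixel (1.0).
image = [[0.0] * SIZE for _ in range(SIZE)]
image[2][5] = 1.0  # row 2, column 5

inputs = flatten(image)
print(len(inputs))        # 324 values, one per input node
print(inputs.index(1.0))  # 41, because 2 * 18 + 5 = 41
```

Each position in the flat list is tied to one fixed pixel location, which matters later when the digit moves around inside the image.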

The neural network has two outputs. The first predicts the likelihood that the image is an ’8’ and the second predicts the likelihood that it is not an ’8’. The network is trained on many different handwritten numbers so that it learns to tell an ’8’ from anything else: when it is fed an ’8’, it is trained to report a 100% probability of ’8’ and a 0% probability of not ’8’.

At this point it can recognize an ’8’, but only in one particular pattern. If the position or size changes slightly, it may fail to recognise it. There are various ways to train it to identify an ’8’ in any position and size. One is to train on more data, and as the data grows the network has to grow too. This is done by stacking more layers of nodes, which is known as a deep neural network. Such a network, however, still treats an ’8’ at the top of a picture separately from an ’8’ at the bottom. This is avoided by using another technique, the convolutional neural network. All these technologies are evolving rapidly, with refined approaches producing better output.
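The position problem can be shown with a toy example. The sketch below compares weights tied to fixed pixel positions with a small filter that slides over the whole image, which is the core idea behind a convolutional layer. It is an illustration under simplifying assumptions: the 3x3 pattern is a stand-in for a handwritten ’8’, the filter is written by hand rather than learned, and all function names are made up for this example.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def flatten(grid):
    return [v for row in grid for v in row]

def place(pattern, size, top, left):
    """Return a size x size blank image with the pattern pasted at (top, left)."""
    image = [[0] * size for _ in range(size)]
    for r, row in enumerate(pattern):
        for c, value in enumerate(row):
            image[top + r][left + c] = value
    return image

def max_sliding_response(image, kernel):
    """Slide a square kernel over every position and keep the strongest match."""
    k = len(kernel)
    size = len(image)
    best = 0
    for top in range(size - k + 1):
        for left in range(size - k + 1):
            window = [row[left:left + k] for row in image[top:top + k]]
            best = max(best, dot(flatten(window), flatten(kernel)))
    return best

# Toy 3x3 stroke pattern standing in for a handwritten '8'.
pattern = [[1, 1, 1],
           [1, 0, 1],
           [1, 1, 1]]

top_image = place(pattern, 8, 0, 0)     # pattern in the top-left corner
bottom_image = place(pattern, 8, 5, 5)  # same pattern, bottom-right corner

# Position-bound weights: one weight per pixel, copied from the first image.
fixed_weights = flatten(top_image)
print(dot(flatten(top_image), fixed_weights))     # 8: strong response
print(dot(flatten(bottom_image), fixed_weights))  # 0: the shifted pattern is missed

# Sliding filter: the same small set of weights is reused at every position.
print(max_sliding_response(top_image, pattern))     # 8
print(max_sliding_response(bottom_image, pattern))  # 8
```

A real convolutional network learns many such filters from data and stacks them in layers, but the reuse of the same weights at every position is what lets it find the digit wherever it appears.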

Face Recognition

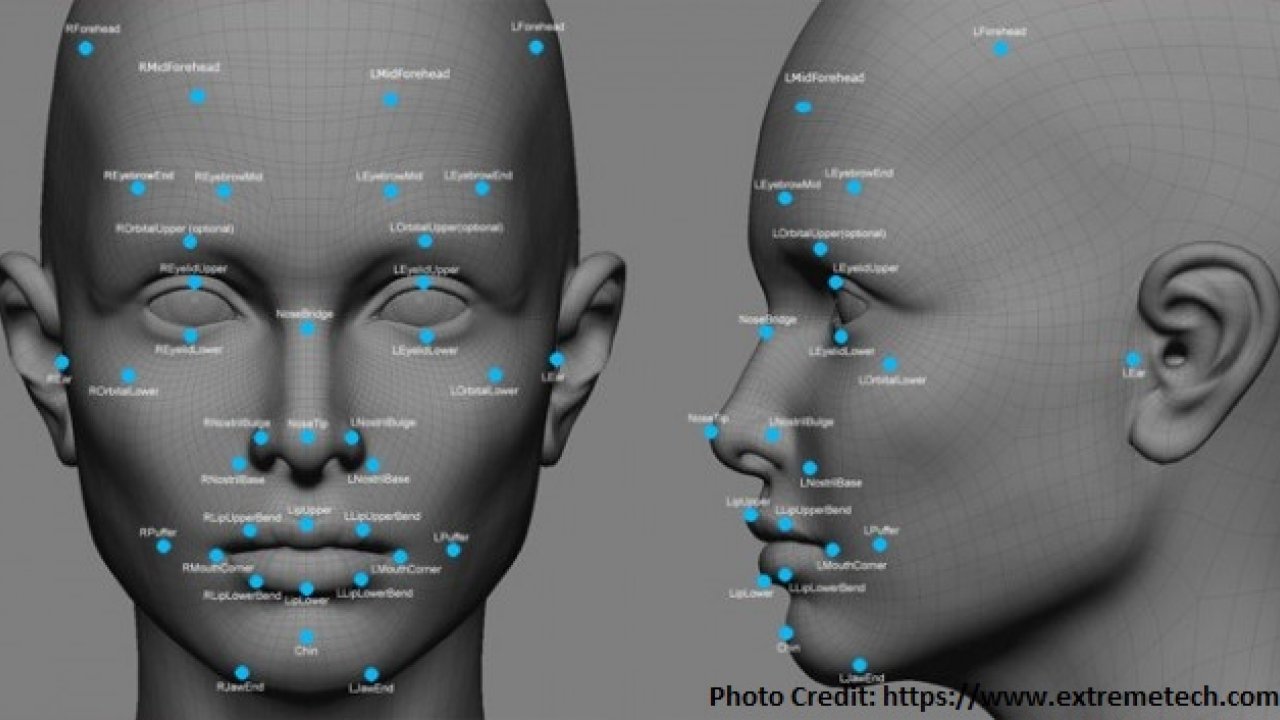

A face conveys a person’s identity and uniquely identifies us. Biometric face recognition technology has applications in many areas, both within law enforcement and outside it.

The conventional pipeline of face recognition consists of four stages: detect, align, represent, and classify [4].

Face detection is easier than face identification because all faces have the same features (eyes, ears, nose, and mouth) in almost the same relative positions. Face identification is a lot more difficult because our face is constantly changing, unlike our fingerprints. With every smile and every expression, the shape of our face contorts. Humans can identify us even when we sport a different hairstyle, but systems have to be trained to do so. Computers struggle with the problem of A-PIE: aging, pose, illumination, and expression. These are sources of noise that make it difficult to distinguish between faces. Deep learning helps reduce this noise and reveal the statistical features that images of a single person have in common, so that the person can be uniquely identified.

DeepFace is a deep learning facial recognition system created by Facebook. It identifies human faces in digital images using a nine-layer neural net with over 120 million connection weights, and it was trained on four million images uploaded by more than 4,000 Facebook users [5]. This method reached an accuracy of 97.35%, approaching human-level performance.

A computer sees a face as a collection of lighter and darker pixels. The system first clusters the pixels of a face into elements such as edges that define contours. Subsequent layers of processing combine these elements into non-intuitive, statistical features that faces have in common but that differ enough to tell faces apart. The output of each processing layer serves as the input to the layer above it. The result of training the deep network is a representational model of a human face. The accuracy of the result depends on the amount of data, which in this case is the number of faces the system is trained on.
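The layering idea, where one stage finds edges and the next stage works on those edges rather than on raw pixels, can be sketched with two hand-written stages. This is only an illustration: a deep network learns its filters from data, and the two functions below (`edge_layer` and `contour_layer`, both hypothetical names) are not how DeepFace is implemented.

```python
def edge_layer(image):
    """First stage: absolute brightness change between horizontal neighbours."""
    return [[abs(row[c + 1] - row[c]) for c in range(len(row) - 1)]
            for row in image]

def contour_layer(edges, threshold=0.5):
    """Second stage: for each column, count the rows with a strong edge there."""
    columns = len(edges[0])
    return [sum(1 for row in edges if row[c] > threshold) for c in range(columns)]

# Toy 4x6 image: dark left half (0.0), light right half (1.0).
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]

edges = edge_layer(image)        # output of the lower layer...
contours = contour_layer(edges)  # ...is the input to the layer above
print(contours)                  # [0, 0, 4, 0, 0]
```

The second stage reports a vertical contour running through all four rows at the boundary between the dark and light halves, something no single pixel value could express.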

FBI’s Next Generation Identification (NGI)

The FBI’s Criminal Justice Information Services (CJIS) Division developed and incrementally integrated a new system called Next Generation Identification (NGI) to replace the Integrated Automated Fingerprint Identification System (IAFIS). NGI provides the criminal justice community with the world’s largest and most efficient electronic repository of biometric and criminal history information [6]. Identification using NGI is much less accurate than Facebook’s DeepFace. One reason is the poor quality of the pictures the FBI uses: they normally come from public cameras, which rarely capture a face straight on. Facebook, by contrast, already knows who our friends are and works with over 250 billion photos and over 4.4 million labelled faces, compared to the FBI’s roughly 50 million photos. With more data, Facebook has an edge in identification. Facebook also has more freedom to make mistakes, since a false photo-tag carries much less weight than a mistaken police ID [7]. Facial recognition is of great use in automatic photo-tagging, but when it is used to identify a suspect, a false match could land an innocent person in trouble.

Search and e-commerce

Google’s Cloud Vision API and Microsoft’s Project Oxford computer vision, face, and emotion APIs provide image-recognition solutions based on deep learning algorithms. They make possible powerful e-commerce and retail applications that enhance the shopping experience of users and create new marketing opportunities for retailers [8].

Cortexica [9] uses its findSimilar™ software to provide services to retailers like Macy’s and Zalando. Cortexica does this by providing the retailer with an API. First, images of all the items in the inventory are ingested into the software. The size and completeness of the dataset are important. Second, a Key Point File (KPF), a proprietary Cortexica file format, is produced for each image. This file contains all the visual information needed to describe the image and help with future searches. Third, the system is connected to the search feature of the customer’s app or website. Fourth, when a consumer sends a query image, it is converted into a KPF, the visual match is computed, and the consumer gets the matched results in order of visual similarity within a couple of seconds.
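The four steps above follow a general index-then-query pattern that can be sketched as follows. This is not Cortexica’s method: the KPF format is proprietary, so the sketch substitutes a plain list of made-up numbers as the image descriptor and cosine similarity as the matching score. All function names, item names, and feature values are hypothetical.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def describe(image_features):
    """Placeholder for step 2: the 'descriptor' is just a copy of the features."""
    return list(image_features)

def build_index(inventory):
    """Steps 1 and 2: ingest every inventory item and store its descriptor."""
    return {item_id: describe(features) for item_id, features in inventory.items()}

def search(index, query_features, top_k=3):
    """Step 4: describe the query, score every item, return best matches first."""
    query = describe(query_features)
    scored = [(cosine_similarity(query, desc), item_id)
              for item_id, desc in index.items()]
    scored.sort(reverse=True)
    return [item_id for _, item_id in scored[:top_k]]

# Hypothetical inventory: made-up [redness, stripiness, sleeve length] values.
inventory = {
    "red-dress": [0.9, 0.1, 0.2],
    "striped-shirt": [0.2, 0.9, 0.8],
    "red-striped-top": [0.8, 0.7, 0.5],
}
index = build_index(inventory)

# A query photo of something plain and red.
print(search(index, [1.0, 0.0, 0.2], top_k=2))  # ['red-dress', 'red-striped-top']
```

Computing descriptors once at ingestion time is what keeps the query fast: at search time only the query image has to be described, and the rest is comparison and sorting.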

"Visual search" is a hot topic because two trends have aligned: consumers’ propensity to take pictures, and retailers’ interest in having their inventory discovered by consumers on mobile devices. Factors like colour, texture, distinctive parts, and shapes all need to be considered when designing an algorithm to meet the broad range of retail fashion requirements.

Companies like Zugara [11] offer augmented reality (AR) shopping applications that let a customer try on clothing in a virtual dressing room by overlaying an image of a dress or shirt to find what suits best. The app watches the shopper through a web camera, so it can capture the shopper’s facial expressions and send them to a Google or Microsoft API for emotion analysis. Depending on the feedback from the API’s image analysis, the AR application can be guided to offer the customer a similar or a different outfit [12].

According to MarketsandMarkets, a global market research and consulting firm, the image recognition market is estimated to grow from USD 15.95 billion in 2016 to USD 38.92 billion by 2021, a CAGR of 19.5%. The future of image recognition looks very interesting.
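As a quick check on the forecast figures quoted above, the compound annual growth rate can be recomputed from the two endpoints. The function name `cagr` is chosen for this example; the formula is the standard one for compound growth.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.195 means 19.5%)."""
    return (end_value / start_value) ** (1 / years) - 1

rate = cagr(15.95, 38.92, 2021 - 2016)
print(f"{rate:.1%}")  # 19.5%
```

The recomputed rate agrees with the 19.5% quoted in the forecast.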
